Convolutional Neural Network

Neural networks are a subset of machine learning, and they are at the heart of deep learning algorithms. Convolutional Neural Networks (CNNs) have transformed computer vision and image processing. These specialized neural networks are designed to learn spatial hierarchies of features from input images automatically and adaptively. Unlike standard neural networks, which connect every input value to every unit through fully connected layers, CNNs use stacks of convolutional layers that apply filters to local neighborhoods of the input. This makes them particularly well suited to processing pixel data.

How Do Convolutional Neural Networks Work?

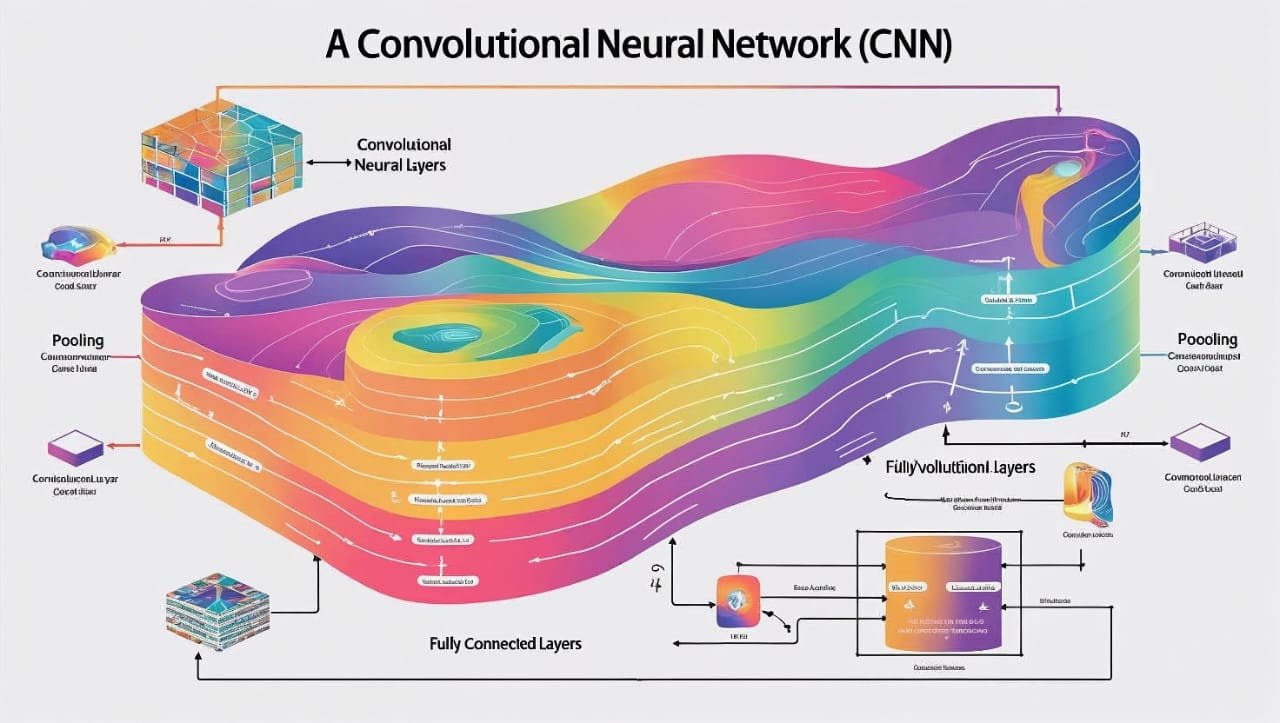

Convolutional neural networks are distinguished from other neural networks by their strong performance on image, speech, and audio signal inputs. A convolutional neural network typically has three common types of layers:

- Convolution Layer

- Pooling Layer

- Fully Connected (FC) Layer

The convolutional layer is the first layer of a convolutional network. It can be followed by additional convolutional layers or by pooling layers, and a fully connected layer is typically the final layer. With each layer, the CNN increases in complexity and identifies larger portions of the image. Early layers focus on simple features, such as colors and edges. As the image data progresses through the layers of the CNN, the network starts to recognize larger elements or shapes of the object until it finally identifies the intended object.
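To make the layer ordering concrete, here is a minimal sketch that traces how the height, width, and depth of the feature map change through a simplified LeNet-5-style stack (convolution, pooling, convolution, pooling, then fully connected layers). The layer sizes follow the commonly cited LeNet-5 configuration; the helper names `conv_shape`, `pool_shape`, and `trace_lenet_like` are hypothetical, and the size formula used is the standard one for convolutions without dilation.

```python
def conv_shape(h, w, num_filters, kernel, stride=1, padding=0):
    """(height, width, depth) after a convolutional layer; depth = number of filters."""
    out_h = (h - kernel + 2 * padding) // stride + 1
    out_w = (w - kernel + 2 * padding) // stride + 1
    return out_h, out_w, num_filters


def pool_shape(h, w, c, size=2, stride=2):
    """(height, width, depth) after pooling; pooling keeps the depth unchanged."""
    return (h - size) // stride + 1, (w - size) // stride + 1, c


def trace_lenet_like(h=32, w=32, c=1):
    """Record the shape after each layer of a simplified LeNet-5-style stack."""
    shapes = [("input", (h, w, c))]
    h, w, c = conv_shape(h, w, num_filters=6, kernel=5)
    shapes.append(("conv1", (h, w, c)))
    h, w, c = pool_shape(h, w, c)
    shapes.append(("pool1", (h, w, c)))
    h, w, c = conv_shape(h, w, num_filters=16, kernel=5)
    shapes.append(("conv2", (h, w, c)))
    h, w, c = pool_shape(h, w, c)
    shapes.append(("pool2", (h, w, c)))
    # Flatten the last feature map into a vector for the fully connected layers.
    shapes.append(("flatten", (h * w * c,)))
    for name, units in (("fc1", 120), ("fc2", 84), ("output", 10)):
        shapes.append((name, (units,)))
    return shapes


for name, shape in trace_lenet_like():
    print(f"{name:8s} {shape}")
```

Each convolution shrinks the map slightly while setting its depth to the number of filters, and each 2x2 pooling step halves the height and width; the final 5x5x16 map is flattened into 400 values for the fully connected layers.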

Types of Convolutional Neural Networks

Kunihiko Fukushima and Yann LeCun laid the foundation of research on convolutional neural networks in their work in 1980 and “Backpropagation Applied to Handwritten Zip Code Recognition” in 1989, respectively. More notably, Yann LeCun successfully applied backpropagation to train neural networks to recognize and classify patterns within a series of handwritten zip codes. He continued this work with his team throughout the 1990s, culminating in “LeNet-5”, which applied the same principles to document recognition. Since then, a number of variant CNN architectures have emerged with the introduction of new datasets, such as MNIST and CIFAR-10, and competitions, such as the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).

Some of the most influential convolutional neural network architectures include:

1. LeNet-5 (1998)

One of the earliest successful CNN architectures designed for handwritten digit recognition.

- LeNet-5 is often described as the classic CNN architecture.

2. AlexNet (2012)

Won the ImageNet competition and popularized CNNs in computer vision.

3. VGGNet (2014)

Showed that depth is a critical component for good performance.

4. ResNet (2015)

Introduced residual connections that enable training of very deep networks.

5. EfficientNet (2019)

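Achieved state-of-the-art accuracy at its release with far fewer parameters than comparable models by scaling network depth, width, and input resolution together (compound scaling).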
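The residual connection introduced by ResNet adds a block's input back to its output, so the block only has to learn a correction F(x) on top of the identity. The sketch below shows the idea on plain Python lists, computing relu(F(x) + x); `small_correction` is a hypothetical stand-in for the block's learned layers, which in a real ResNet are convolutions with batch normalization.

```python
def relu(values):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in values]


def residual_block(x, f):
    """Compute relu(f(x) + x): the skip connection adds the input back."""
    fx = f(x)
    if len(fx) != len(x):
        raise ValueError("f must preserve the input size for an identity skip")
    return relu([a + b for a, b in zip(fx, x)])


# Hypothetical stand-in for the block's learned layers.
def small_correction(x):
    return [0.1 * v - 0.5 for v in x]


print(residual_block([1.0, 2.0, -3.0], small_correction))
```

If the learned part outputs zeros, the block passes its (rectified) input straight through, which is one intuition for why very deep stacks of such blocks remain trainable.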

Applications of Convolutional Neural Networks

Convolutional neural networks have found widespread applications in many domains. In computer vision, they are widely used for facial recognition, self-driving cars, and medical image analysis. CNNs are also increasingly applied to areas such as natural language processing and video classification, demonstrating their usefulness beyond image data. As the technology continues to advance, the range of applications for convolutional neural networks is likely to keep broadening.

Convolutional layers

In a CNN, the input is a tensor with the following shape:

(number of inputs) × (input height) × (input width) × (input channels)

A convolutional layer abstracts the image into a feature map, also called an activation map, with shape:

(number of inputs) × (feature map height) × (feature map width) × (feature map channels).

Convolutional layers convolve the input and pass the result to the next layer. This is analogous to the response of a neuron in the visual cortex to a specific stimulus.[23] Each convolutional neuron processes data only for its receptive field.
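Deep learning libraries implement the “convolution” in a convolutional layer as cross-correlation (the kernel is not flipped). The minimal sketch below computes a single-channel feature map with stride 1 and no padding, so each output value depends only on the pixels in its receptive field. `conv2d` is a hypothetical helper; a real layer also sums over input channels, adds a bias, and learns the kernel values.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 cross-correlation of a 2D image with a 2D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value is a weighted sum over a kh x kw receptive field.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A vertical-edge detector: responds where intensity increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, vertical_edge))
```

The resulting 3x3 feature map is non-zero only in the middle column, where the kernel straddles the dark-to-bright boundary.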

Hyperparameters

You must set three important hyperparameters that affect the output volume size before training the neural network. These include:

1. Number of filters: this determines the depth of the output. For instance, three distinct filters produce three different feature maps, giving an output depth of three.

2. Stride: the distance, in pixels, that the kernel moves over the input matrix at each step. A stride of 1 is the most common default; a larger stride, such as 2, skips positions and therefore produces a smaller output.

3. Zero-padding: this is usually used when the filters do not fit the input image. It sets all elements that fall outside the input matrix to zero, producing an output that is larger than or equal in size to the unpadded result. There are three types of padding:

Valid padding: Also known as no padding. In this case, the last convolution is dropped if the kernel does not fit evenly into the input dimensions.

Same padding: This padding ensures that the output has the same spatial size as the input (for a stride of 1).

Full padding: This padding adds enough zeros to the border of the input (the kernel size minus one on each side) that the output becomes larger than the input.
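The effect of these hyperparameters on the output size is captured by the standard formula O = floor((W - K + 2P) / S) + 1, for input width W, kernel size K, padding P on each side, and stride S. The sketch below, with hypothetical helpers `padding_for` and `output_size`, chooses P for each padding mode described above. It assumes an odd kernel size for same padding so the zeros can be split symmetrically, and same padding only preserves the size when the stride is 1.

```python
def padding_for(mode, kernel):
    """Zeros added on each side of the input for a given padding mode."""
    if mode == "valid":
        return 0
    if mode == "same":
        # Assumes an odd kernel size so the padding is symmetric.
        if kernel % 2 == 0:
            raise ValueError("symmetric same padding needs an odd kernel size")
        return (kernel - 1) // 2
    if mode == "full":
        return kernel - 1
    raise ValueError(f"unknown padding mode: {mode}")


def output_size(width, kernel, stride=1, mode="valid"):
    """O = floor((W - K + 2P) / S) + 1; 'same' keeps O == W only when stride == 1."""
    pad = padding_for(mode, kernel)
    return (width - kernel + 2 * pad) // stride + 1


for mode in ("valid", "same", "full"):
    print(mode, output_size(width=7, kernel=3, mode=mode))
print("valid, stride 2:", output_size(width=7, kernel=3, stride=2))
print("valid, stride 2, width 8:", output_size(width=8, kernel=3, stride=2))
```

For a 7-pixel input and a 3x3 kernel this gives 5, 7, and 9 for valid, same, and full padding. With stride 2, widths 7 and 8 both give 3 outputs: for width 8, the last position does not fit and is dropped, as described for valid padding.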

After each convolution operation, a CNN typically applies a Rectified Linear Unit (ReLU) transformation to the feature map, introducing nonlinearity into the model.
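ReLU is simply f(x) = max(0, x) applied to every element of the feature map. A minimal sketch on a nested-list feature map, where `relu_feature_map` is a hypothetical helper and the values are arbitrary:

```python
def relu_feature_map(feature_map):
    """Apply ReLU element-wise: negative responses become 0, positive ones pass through."""
    return [[max(0.0, value) for value in row] for row in feature_map]


feature_map = [
    [-2.0, 0.5, 3.0],
    [1.5, -0.1, 0.0],
]
print(relu_feature_map(feature_map))
```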

Additional convolutional layer

As mentioned earlier, another convolutional layer can follow the first one. This makes the structure of the CNN hierarchical, because later layers take as input the (often pooled) feature maps produced by earlier layers and so can see the pixels within the receptive fields of those layers. For example, suppose we are trying to determine whether an image contains a bicycle. You can think of a bicycle as a sum of parts: a frame, handlebars, wheels, pedals, and so on. Each part corresponds to a lower-level pattern in the network, and the combination of these parts represents a higher-level pattern, creating a feature hierarchy within the CNN. Ultimately, the convolutional layers convert the image into numerical values that the rest of the network can interpret to extract relevant patterns.
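One way to see this hierarchy is to compute how much of the original image each unit can “see” as layers are stacked. The sketch below uses the standard recurrence r_out = r_in + (k - 1) * j_in and j_out = j_in * s, where r is the receptive field size, j is the cumulative stride (the distance in input pixels between adjacent units), k is the kernel size, and s is the stride. The `receptive_fields` helper and the layer list are hypothetical examples.

```python
def receptive_fields(layers):
    """Return (name, receptive field size in input pixels) after each layer.

    Each layer is given as (name, kernel_size, stride).
    """
    r, jump = 1, 1  # one input pixel sees itself; neighbours are 1 pixel apart
    results = []
    for name, kernel, stride in layers:
        r = r + (kernel - 1) * jump
        jump = jump * stride
        results.append((name, r))
    return results


layers = [
    ("conv1 3x3", 3, 1),
    ("pool1 2x2", 2, 2),
    ("conv2 3x3", 3, 1),
    ("pool2 2x2", 2, 2),
    ("conv3 3x3", 3, 1),
]
for name, r in receptive_fields(layers):
    print(f"{name}: {r}x{r} input pixels")
```

Each unit in the first layer sees only a 3x3 patch, while a unit in the fifth layer sees an 18x18 region, which is large enough to respond to whole parts (such as a wheel) rather than single edges.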

Pooling Layer

Pooling layers, also known as downsampling, perform dimensionality reduction, reducing the spatial size of the input and therefore the computation and number of parameters needed in later layers. Like the convolutional layer, the pooling operation sweeps a filter across the entire input, but this filter has no weights. Instead, it applies an aggregation function to the values within its receptive field and writes the result to the output array.

There are two main types of pooling:

Max pooling:

As the filter moves across the input, it selects the pixel with the maximum value to send to the output array. Researchers tend to use this approach more often than average pooling.

Average pooling:

As the filter moves across the input, it calculates the average value within the receptive field to send to the output array.

While a lot of information is lost in the pooling layer, pooling also brings several benefits to the CNN: it reduces complexity, improves efficiency, and limits the risk of overfitting.
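Both pooling types can be sketched with one window-sliding loop that differs only in the aggregation function. The sketch below assumes the common 2x2 window with stride 2; `pool2d` is a hypothetical helper, and note that it has no learned weights.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Slide a size x size window and aggregate it; there are no learned weights."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            window = [
                feature_map[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            ]
            if mode == "max":
                row.append(max(window))
            elif mode == "average":
                row.append(sum(window) / len(window))
            else:
                raise ValueError(f"unknown pooling mode: {mode}")
        output.append(row)
    return output


feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 8, 4],
]
print(pool2d(feature_map, mode="max"))      # [[6, 2], [2, 8]]
print(pool2d(feature_map, mode="average"))  # [[3.5, 1.0], [1.0, 6.0]]
```

Both calls reduce the 4x4 map to 2x2: max pooling keeps the strongest response in each window, while average pooling smooths the responses.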

Fully Connected Layer

The name of the fully connected layer describes it well. As mentioned earlier, in partially connected layers the pixel values of the input image are not directly connected to the output layer. In the fully connected layer, by contrast, each node in the output layer connects directly to every node in the previous layer.

This layer performs classification based on the features extracted by the previous layers and their different filters. While convolutional layers tend to use ReLU functions, the final FC layer usually applies a softmax activation function, which turns its outputs into class probabilities between 0 and 1 that sum to 1.
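The sketch below shows a fully connected layer followed by softmax: every output unit computes a weighted sum over all inputs plus a bias, and softmax converts the resulting scores into probabilities. The feature vector, weights, and biases are hypothetical values for a 3-class problem, not learned parameters.

```python
import math


def fully_connected(inputs, weights, biases):
    """Each output unit sees every input: z_k = sum_i w[k][i] * x[i] + b[k]."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(logits):
    """Turn raw scores into probabilities in (0, 1) that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical flattened features and weights for a 3-class classifier.
features = [0.5, 1.0, -0.5, 2.0]
weights = [
    [0.2, -0.1, 0.4, 0.3],
    [-0.3, 0.8, 0.1, -0.2],
    [0.1, 0.1, -0.5, 0.6],
]
biases = [0.0, 0.1, -0.2]

probs = softmax(fully_connected(features, weights, biases))
print([round(p, 3) for p in probs])
print("predicted class:", probs.index(max(probs)))
```

Here the raw scores are 0.4, 0.3, and 1.4, so class 2 receives the highest probability.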

Advantages of CNNs

Automatic Feature Extraction: Learns features automatically without manual engineering

Spatial Hierarchy: Captures spatial hierarchies in data through local connectivity

Parameter Sharing: Reduces number of parameters through shared weights in convolutional layers

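Translation Invariance: Can recognize patterns largely regardless of their position in the image, because the same filters are applied at every location and pooling adds some tolerance to small shifts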
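To illustrate parameter sharing, the sketch below compares the parameter count of a 3x3 convolutional layer with 16 filters on a 32x32 RGB input against a fully connected layer that produces an output of the same size (32x32x16, assuming same padding for the convolution). The layer sizes are arbitrary examples, and `conv_params` and `dense_params` are hypothetical helpers.

```python
def conv_params(kernel, in_channels, out_channels):
    """Shared weights: one kernel x kernel x in_channels filter per output channel, plus biases."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_units, out_units):
    """A fully connected layer has one weight per input-output pair, plus biases."""
    return in_units * out_units + out_units


h, w, c_in, c_out = 32, 32, 3, 16
conv = conv_params(kernel=3, in_channels=c_in, out_channels=c_out)
# A dense layer mapping the 32x32x3 input to a 32x32x16 output.
dense = dense_params(h * w * c_in, h * w * c_out)
print(f"conv:  {conv:,} parameters")
print(f"dense: {dense:,} parameters")
```

The convolutional layer needs 448 parameters because the same 16 filters are reused at every position, while the dense layer needs over 50 million.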

Challenges and Limitations

Computationally Intensive: Especially for high-resolution images

Large Datasets Required: Typically needs large amounts of labeled training data

Black Box Nature: Difficult to interpret how decisions are made

Fixed Input Size: Most classic implementations require fixed-size inputs, because their fully connected layers expect a flattened feature vector of a fixed length
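A rough sketch of the first and last challenges follows. The number of multiply-accumulate operations (MACs) in a convolutional layer grows with the output area, and the length of the flattened vector fed to the first fully connected layer depends on the input resolution, so a dense layer sized for one resolution cannot accept another. The helpers `conv_macs` and `flattened_length` are hypothetical. The sketch assumes a single same-padded, stride-1 convolution with 16 filters followed by one 2x2 pooling step.

```python
def conv_macs(out_h, out_w, kernel, in_channels, out_channels):
    """Multiply-accumulates: one kernel-sized dot product per output element."""
    return out_h * out_w * out_channels * kernel * kernel * in_channels


def flattened_length(height, width, channels=16, pool=2):
    """Vector length reaching the first FC layer after a same-padded conv and one pooling step."""
    return (height // pool) * (width // pool) * channels


for side in (224, 448):
    # With same padding and stride 1, the output is side x side.
    macs = conv_macs(side, side, kernel=3, in_channels=3, out_channels=16)
    print(f"{side}x{side}: {macs:,} MACs, flattened length {flattened_length(side, side):,}")
```

Doubling the image side quadruples both the computation and the flattened length, so an FC layer built for 224x224 inputs (200,704 values) would not accept the 802,816 values produced by a 448x448 image.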

FAQs

What is a convolutional neural network?